The goal of this lab was to learn how to properly process hyperspectral remotely sensed data and identify features within it. In particular, this lab taught me how to detect noise in hyperspectral data, remove the spectral channels that contain excess noise, and detect target features within hyperspectral images using reflectance spectra.

Methods

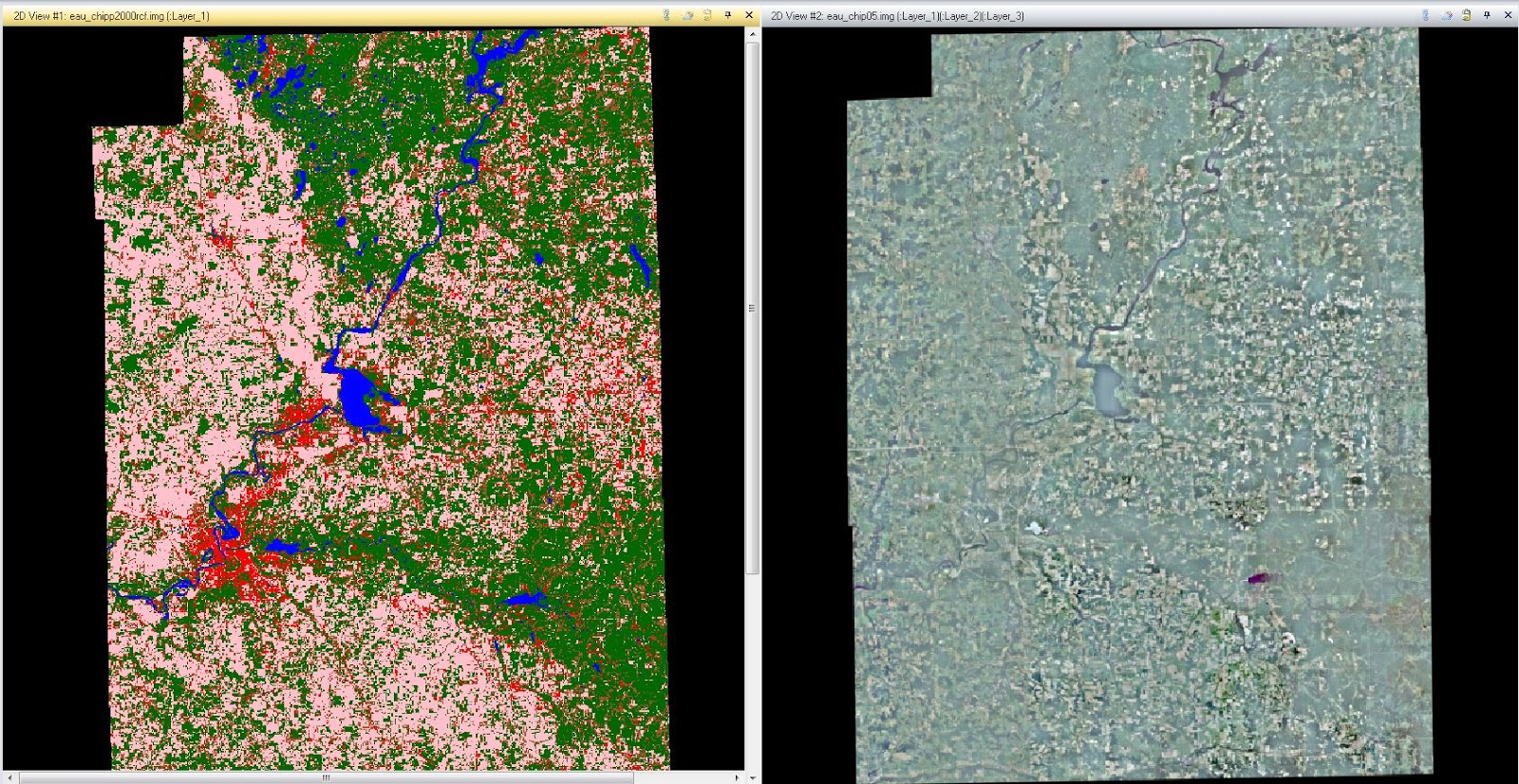

In the first portion of the lab I used the spectral analysis workstation in ERDAS Imagine. Before using this tool, however, I ran anomaly detection on the hyperspectral data provided for this lab, using the anomaly detection function in ERDAS Imagine. Anomaly detection is the process of searching an input image for pixels whose spectral signatures differ greatly from those of most other pixels in the image. Put simply, it asks the question "is there anything unusual in the image?" After opening the anomaly detection wizard, the first step was to select the input image I was given, choosing the "image only" option in the dialog. Next, I kept the threshold at its default value and ran the wizard, selecting the options to create an output file and proceed to the spectral analysis workstation. Once the anomaly detection finishes processing, the spectral analysis workstation opens and displays the anomaly mask (Fig. 1). Some white areas appear against the black background. To examine them, I selected the "swipe" tool from the menu and moved the swipe position to see its effect on the image. In summary, the main point of conducting the anomaly detection was to help identify bad bands that should be removed from the analysis. Identifying bad bands is very important because hyperspectral remote sensing uses a very large number of bands.

(Fig. 1) The output image of the anomaly detection is shown above. The regions shown in white are where anomalies are present within the input image.
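The question "is there anything unusual in the image?" can also be illustrated outside ERDAS Imagine. The sketch below is not the algorithm used by the anomaly detection wizard, which this report does not describe. It is a simplified stand-in that scores each pixel by how far its spectrum lies from the scene average, measured in per-band standard deviations, and flags pixels above a threshold. The function name `anomaly_mask`, the toy scene, and the threshold value are all hypothetical, and plain Python lists are used only to keep the example dependency-free.

```python
import math
from statistics import mean, pstdev


def anomaly_mask(pixels, threshold=3.0):
    """Flag pixels whose spectra differ strongly from the scene average.

    pixels    : list of spectra, each a list of per-band values
    threshold : hypothetical cutoff on the score (not the ERDAS default)
    Returns a list of booleans, True where a pixel is flagged
    (the equivalent of the white areas in an anomaly mask).
    """
    n_bands = len(pixels[0])
    # Per-band mean and standard deviation over the whole scene.
    band_mean = [mean(p[b] for p in pixels) for b in range(n_bands)]
    band_std = [pstdev([p[b] for p in pixels]) for b in range(n_bands)]

    mask = []
    for p in pixels:
        # Squared z-score per band; bands with zero spread are skipped.
        z2 = [((p[b] - band_mean[b]) / band_std[b]) ** 2
              for b in range(n_bands) if band_std[b] > 0]
        # Root-mean-square z-score across the usable bands.
        score = math.sqrt(sum(z2) / len(z2)) if z2 else 0.0
        mask.append(score > threshold)
    return mask


# Toy scene: 20 near-identical background pixels plus one unusual spectrum.
background = [
    [0.20 + 0.001 * (i % 5), 0.30 + 0.001 * (i % 3), 0.25 + 0.001 * (i % 4)]
    for i in range(20)
]
scene = background + [[0.90, 0.05, 0.80]]

mask = anomaly_mask(scene)
print(mask.index(True), sum(mask))  # position and count of flagged pixels
```

In this toy scene only the last pixel is flagged. A noisy band would inflate the scores of many ordinary pixels in the same way, which is one reason bad bands matter for this kind of detection.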

Because so many bands are collected, some of them may be corrupted, for example by absorption at particular wavelengths caused by atmospheric distortion, or by issues with the sensor. If these bad bands are included in the analysis, the calculations the algorithms produce can be incorrect. To determine which bands were bad, I ran the anomaly detection wizard again, this time selecting the "bad band selection tool" option, which opened a display in the spectral analysis workstation. The bad band selection tool shows a preview of the image, the data histogram, and the mean plot of the selected bands. I selected the bands provided by my professor and classified them as "bad bands" (Fig. 2). Once all the bands were selected, I ran the program and opened the new output image in the spectral analysis workstation (Fig. 3).

(Fig. 2) The bad band selection tool lets the user mark which of the 224 bands in this hyperspectral image should not be used in the output image analysis.

(Fig. 3) The output image of the anomaly detection after removing the bad bands from the original image shows more anomalies than Fig. 1.
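Excluding bad bands amounts to dropping the same band positions from every pixel spectrum before any further analysis. The following is a minimal sketch of that idea, not the bad band selection tool itself. The function name `drop_bands`, the 1-based band numbering, and the example band numbers are assumptions for illustration; they are not the list of bands provided in the lab.

```python
def drop_bands(pixels, bad_bands):
    """Return copies of the spectra with the listed bands removed.

    pixels    : list of spectra, each a list of per-band values
    bad_bands : 1-based band numbers to exclude (assumed numbering convention)
    Returns (cleaned spectra, 1-based numbers of the bands that were kept).
    """
    n_bands = len(pixels[0])
    bad = set(bad_bands)
    unknown = [b for b in bad if not 1 <= b <= n_bands]
    if unknown:
        raise ValueError(f"band numbers out of range: {sorted(unknown)}")
    # 0-based indices of the bands that are kept, in their original order.
    keep = [i for i in range(n_bands) if (i + 1) not in bad]
    cleaned = [[p[i] for i in keep] for p in pixels]
    return cleaned, [i + 1 for i in keep]


# Toy example: 2 pixels with 6 bands; bands 3 and 4 are treated as bad
# (a made-up choice, with 9.99 standing in for corrupted values).
pixels = [
    [0.10, 0.12, 9.99, 9.99, 0.15, 0.16],
    [0.20, 0.22, 9.99, 9.99, 0.25, 0.26],
]
cleaned, kept = drop_bands(pixels, bad_bands=[3, 4])
print(kept)        # band numbers that remain
print(cleaned[0])  # first spectrum without the bad bands
```

Returning the kept band numbers alongside the data makes it easier to line the reduced spectra up with wavelengths or library spectra later.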

The next process I performed on the hyperspectral image was target detection. I first used the simple target detection method and then target detection using spectral libraries. Target detection searches a hyperspectral image for a specific material (the target) that is thought to be present only in small amounts. Using the target detection wizard, I created a new project and then chose the target spectrum selection process. For the simple target detection method, I input a spectral library provided with this lab. For target detection with spectral libraries, I used data from the USGS spectral library. This method was somewhat more complex, because I needed to check the sensor information tool to make sure that the spectral library data matched that of my image. I also excluded the bad bands from the final output, using the same process described above.
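Matching a library spectrum to an image involves two steps that can be sketched in code: resampling the library spectrum to the sensor's band centers, then comparing it with each pixel. The sketch below uses linear interpolation for the first step and the spectral angle for the second. Both are assumptions chosen for illustration, since this report does not state which method the target detection wizard applies. The wavelengths, reflectances, and angle threshold are made-up values, not USGS library data.

```python
import math


def resample(lib_wl, lib_refl, band_centers):
    """Linearly interpolate a library spectrum at the sensor band centers.

    lib_wl must be strictly ascending, hold at least two points,
    and span every band center.
    """
    out = []
    for wl in band_centers:
        if not lib_wl[0] <= wl <= lib_wl[-1]:
            raise ValueError(f"band center {wl} outside library range")
        # Find the library interval that brackets this band center.
        j = 1
        while lib_wl[j] < wl:
            j += 1
        w0, w1 = lib_wl[j - 1], lib_wl[j]
        t = (wl - w0) / (w1 - w0)
        out.append(lib_refl[j - 1] + t * (lib_refl[j] - lib_refl[j - 1]))
    return out


def spectral_angle(a, b):
    """Angle in radians between two spectra; 0 means the same spectral shape."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    # Clamp to guard against rounding just outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, dot / norm)))


# Hypothetical library spectrum (wavelength in nm, reflectance)
# and hypothetical sensor band centers.
lib_wl = [400, 500, 600, 700, 800]
lib_refl = [0.10, 0.20, 0.40, 0.30, 0.50]
band_centers = [450, 550, 650, 750]

target = resample(lib_wl, lib_refl, band_centers)

pixels = [
    [0.30, 0.60, 0.70, 0.80],  # same shape as the target, twice as bright
    [0.40, 0.35, 0.30, 0.25],  # different shape
]
max_angle = 0.10  # radians; hypothetical detection threshold
detected = [spectral_angle(p, target) <= max_angle for p in pixels]
print(detected)
```

The spectral angle ignores overall brightness, so the first pixel matches the target even though it is twice as bright. Any bad bands would need to be removed from both the image spectra and the resampled library spectrum so that the two still line up band for band.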

Results

These methods produced different results. For instance, anomaly detection with the bad bands excluded was much more effective at detecting anomalies within the hyperspectral image than anomaly detection using all the bands. As for target detection, the simple and spectral library methods yielded equally accurate results.

Sources

All the data used in this lab exercise was from ERDAS Imagine 2010.