Goals and Background

The main goal of this lab is to practice atmospherically correcting remotely sensed images. Throughout this lab the image analyst will further develop their skills in atmospheric correction using three methods: absolute atmospheric correction using empirical line calibration (ELC), absolute atmospheric correction using enhanced image-based dark object subtraction (DOS), and relative atmospheric correction using multidate image normalization.

Methods

Absolute Atmospheric Correction: Using ELC (Empirical Line Calibration)

Throughout this part of the lab we will be using the ELC method, which matches in situ data, collected at the same time the image is taken over the area of interest, to the remotely sensed data. ELC is calculated with the following equation: CR_k = DN_k × M_k + L_k, where CR_k is the corrected digital output pixel value for band k, DN_k is the input value of the band being corrected, M_k is a multiplicative term that affects the brightness values, and L_k is an additive term.

M_k acts as the gain and L_k acts as the offset. Both come from the regression equations built between the in situ spectral reflectance measurements and the sensor's spectral measurements for the same features.
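To make the regression concrete, the following Python sketch shows what is computed for a single band, assuming an ordinary least-squares fit of library reflectance against image DN. The function names (`fit_line`, `elc_band`) and all sample values are hypothetical illustrations, not output from ERDAS Imagine or the ASTER spectral library.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = m * x + b; returns (m, b)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    m = sxy / sxx
    b = mean_y - m * mean_x
    return m, b


def elc_band(dn_band, m_k, l_k):
    """Apply CR_k = DN_k * M_k + L_k to every pixel of one band (a list of rows)."""
    return [[dn * m_k + l_k for dn in row] for row in dn_band]


# Hypothetical samples for one band: image DN of each sampled feature
# (asphalt, forest, aluminum roof, water) and the matching reference reflectance.
image_dn = [62.0, 45.0, 120.0, 20.0]
reference_reflectance = [0.10, 0.05, 0.45, 0.02]  # made-up values

m_k, l_k = fit_line(image_dn, reference_reflectance)  # gain and offset for band k
corrected = elc_band([[62, 45], [120, 20]], m_k, l_k)
```

Each band gets its own (M_k, L_k) pair, which is why the sampling step pairs every image sample with a reference spectrum across all bands.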

In order to perform this type of atmospheric correction, we will use the Spectral Analysis Workstation in ERDAS Imagine 2013. The first step is to open an analysis image, which is the image you want to correct. Once it has been added, click on the "edit atmospheric correction" option and select empirical line as the method (Fig. 1).

(Fig. 1) The atmospheric adjustment tool in the spectral analysis workstation is used to conduct the ELC atmospheric correction.

Once this window is open, it is time to collect samples from the image and identify matching references from various spectral libraries in order to conduct the ELC. We will start by sampling a road. Find a road surface feature in the image, select the color grey, and then use the "create a point selector" tool to carefully select the middle of the road. This adds a line to the graph in the bottom right corner of the atmospheric adjustment tool window. The next task is to add the reference spectral reflectance signature of an asphalt surface from the ASTER spectral library. Once this has been added, you can see how the signature from the image you are correcting differs from the library signature for that surface feature type (Fig. 2).

(Fig. 2) This image shows the spectrum plot for asphalt. The sample is from a road on our original image while the reference is from the ASTER spectral library.

We will then repeat this process for the remaining surface features: vegetation/forest, aluminum rooftop, and water. Once all samples are paired with their references, the Spectral Analysis Workstation creates a regression equation for each band in the image. After saving the regression information, we go back to the Spectral Analysis Workstation and select the preprocess and atmospheric adjustment options to execute the ELC atmospheric correction. The result is the final output image, atmospherically corrected using the empirical line calibration method (Fig. 3).

(Fig. 3) The original image has been atmospherically corrected using the ELC (empirical line calibration) method.

Absolute Atmospheric Correction: Using Enhanced Image-Based Dark Object Subtraction

The next method of atmospheric correction we will use is the DOS (dark object subtraction) method. This process uses a number of parameters to atmospherically correct an image, including sensor gain, offset, solar zenith angle, atmospheric scattering, solar irradiance, absorption, and path radiance. The method requires two steps: first, the image recorded by the satellite is converted to an at-satellite spectral radiance image; second, the at-satellite radiance image is converted to true surface reflectance.

Step 1:

For step one we will first open Model Maker in ERDAS Imagine 2013. To save time, we will create 6 independent models in the same Model Maker window. The input raster for each model is one band of the original image we want to atmospherically correct, and each model applies the formula shown in Fig. 4. Most of the values the formula needs can be found in the image metadata. Once the formula has been added to each model and the output images have been assigned their save locations, the model can be run (Fig. 5).

(Fig. 4) The formula used to convert the original satellite image to an at-satellite spectral radiance image. (Formula provided by Dr. Cyril Wilson of the University of Wisconsin-Eau Claire.)

(Fig. 5) The Model Maker module for the first step of the DOS method should include an individual model for each of the 6 bands, applying the formula from Fig. 4 with that band's values from the metadata.
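The Fig. 4 formula is not reproduced in the text. As a hedged sketch, the Python below assumes the commonly used Landsat TM/ETM+ linear rescaling, L = ((LMAX - LMIN) / (QCALMAX - QCALMIN)) × (QCAL - QCALMIN) + LMIN, which converts a band's DN (QCAL) to at-satellite spectral radiance. The function names are hypothetical, and the LMIN/LMAX values in the usage line are placeholders; the real values come from the scene metadata.

```python
def dn_to_radiance(qcal, lmin, lmax, qcalmin=1.0, qcalmax=255.0):
    """Convert one DN (QCAL) to at-satellite spectral radiance.

    Assumes the common Landsat TM/ETM+ linear rescaling; the qcalmin/qcalmax
    defaults are assumptions, so check them against the scene metadata.
    """
    gain = (lmax - lmin) / (qcalmax - qcalmin)
    return gain * (qcal - qcalmin) + lmin


def band_to_radiance(band, lmin, lmax, qcalmin=1.0, qcalmax=255.0):
    """Apply the conversion to every pixel of one band (a list of rows)."""
    return [[dn_to_radiance(dn, lmin, lmax, qcalmin, qcalmax) for dn in row]
            for row in band]


# Placeholder LMIN/LMAX for one band; one such call per band mirrors the
# 6 separate models in the Model Maker window.
radiance_band = band_to_radiance([[1, 128], [200, 255]], lmin=-1.5, lmax=190.0)
```

The minimum DN maps to LMIN and the maximum DN maps to LMAX, so a quick sanity check on any band is that its radiance values stay within that range.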

Step 2:

Step two follows the same process as step 1, but uses a different formula. The formula (Fig. 6) requires the path radiance of each band, which is measured as the distance from the origin of the band's histogram to the start of the actual histogram. The formula also uses the solar zenith angle, which is the same for all bands, and the Earth-Sun distance. This distance depends on the day of the year the image was acquired and can be looked up in a table of these values. We then set up another Model Maker window with 6 individual models, as in step 1, using the new formula shown in Fig. 6. The new model converts the at-satellite spectral radiance to true surface reflectance (Fig. 7).

(Fig. 6) This formula is used in the second step of the DOS method of atmospheric correction to convert at-satellite spectral radiance to true surface reflectance values.

(Fig. 7) The Model Maker module for the second step of the DOS method should include an individual model for each of the 6 bands, applying the formula from Fig. 6 with that band's values.
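The Fig. 6 formula is also not reproduced in the text, so the Python below is a sketch of one common form of dark-object-subtraction reflectance, assumed here: reflectance = π × (L - L_path) × d² / (TAU_v × ESUN × cos θ × TAU_z). It assumes the atmospheric transmittance terms TAU_v and TAU_z default to 1, estimates the path radiance as the lowest valid value in the band (the start of its histogram), and approximates the Earth-Sun distance d with a standard cosine formula instead of the lookup table the lab uses. All function names are hypothetical.

```python
import math


def earth_sun_distance_au(day_of_year):
    """Approximate Earth-Sun distance in AU (a stand-in for the lookup table)."""
    return 1.0 - 0.01672 * math.cos(math.radians(0.9856 * (day_of_year - 4)))


def dark_object_radiance(band, nodata=None):
    """Estimate path radiance as the lowest valid value (start of the histogram)."""
    return min(v for row in band for v in row if nodata is None or v != nodata)


def radiance_to_reflectance(radiance, path_radiance, esun, sun_zenith_deg, d_au,
                            tau_v=1.0, tau_z=1.0):
    """Assumed DOS form; tau_v/tau_z default to 1 (no transmittance correction)."""
    cos_z = math.cos(math.radians(sun_zenith_deg))
    return (math.pi * (radiance - path_radiance) * d_au ** 2) / (
        tau_v * esun * cos_z * tau_z)


def band_to_reflectance(radiance_band, esun, sun_zenith_deg, d_au, nodata=None):
    """Subtract the band's dark object and convert every valid pixel to reflectance."""
    path = dark_object_radiance(radiance_band, nodata)
    return [[v if v == nodata else
             radiance_to_reflectance(v, path, esun, sun_zenith_deg, d_au)
             for v in row] for row in radiance_band]
```

ESUN and the solar zenith angle are per-band and per-scene inputs the analyst supplies; the sun zenith angle is passed in degrees and is the same for every band, matching the description above.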

Final Step:

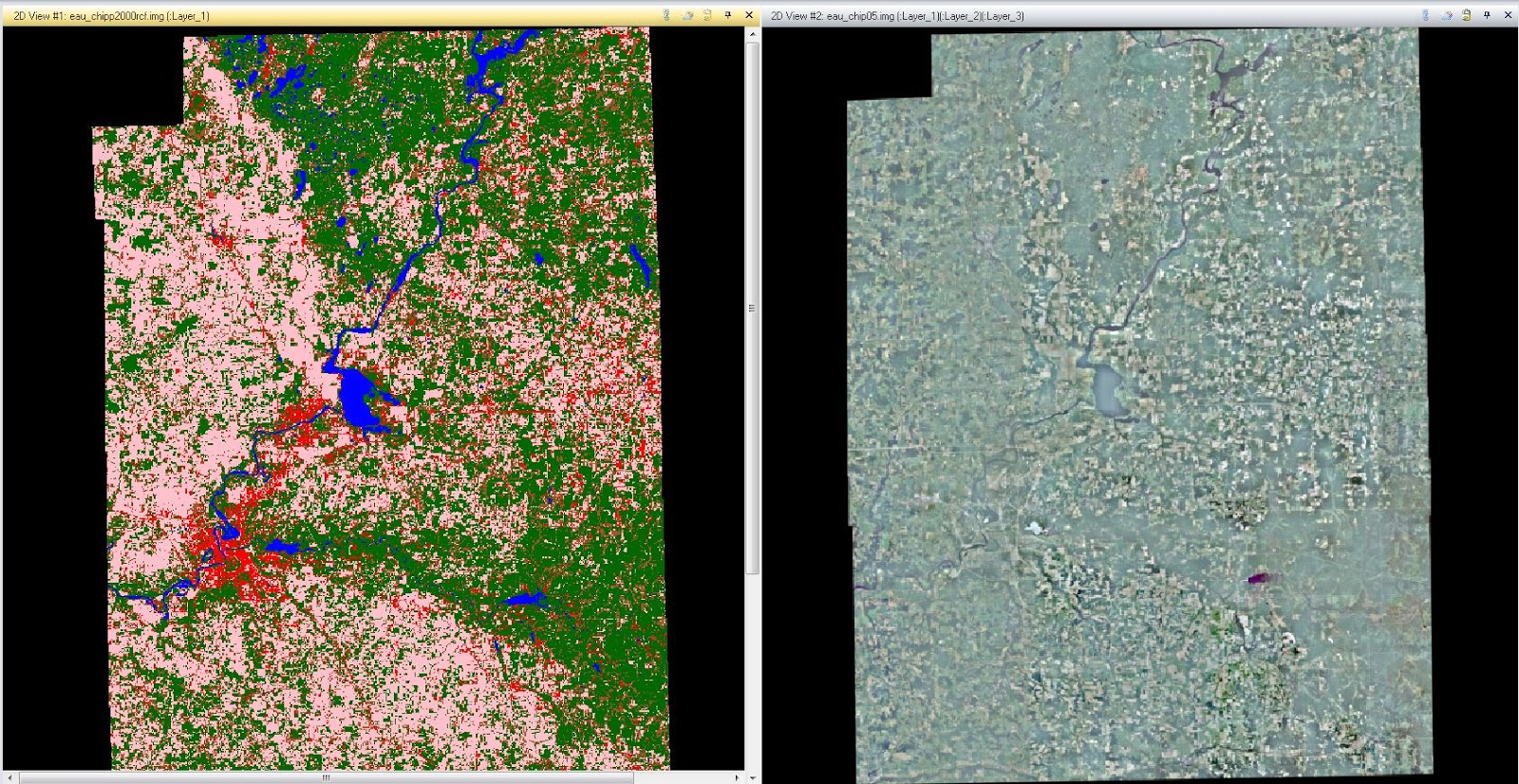

After both of the above steps have been completed, stack the 6 images produced in the second step into a single image. Once they have been stacked, you can compare the final output image with the original (Fig. 8).

(Fig. 8) The image above shows the original image on the left and the atmospherically corrected image on the right using the DOS method.
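The layer stack itself is done in ERDAS Imagine, but the idea can be sketched in a few lines of Python: the single-band outputs must share the same grid, and their order defines the band order of the stacked image. The names `layer_stack` and `pixel_spectrum` are hypothetical, and the sketch assumes each band is a list of rows supplied in band order.

```python
def layer_stack(bands):
    """Combine single-band rasters (lists of rows) into one band-sequential stack."""
    if not bands:
        raise ValueError("no bands to stack")
    rows, cols = len(bands[0]), len(bands[0][0])
    for i, band in enumerate(bands, start=1):
        # Every band must cover exactly the same grid as the first one.
        if len(band) != rows or any(len(r) != cols for r in band):
            raise ValueError(f"band {i} does not match the {rows}x{cols} grid")
    return [[list(r) for r in band] for band in bands]


def pixel_spectrum(stack, row, col):
    """Return one pixel's values across all stacked bands, in band order."""
    return [band[row][col] for band in stack]
```

Reading a pixel's spectrum back out of the stack is a quick way to confirm the bands went in the intended order.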

Relative Atmospheric Correction: Using Multidate Image Normalization

The last method we will use for atmospheric correction in this lab is multidate image normalization. This method is usually used when absolute atmospheric correction is not possible because in situ data or metadata for a remotely sensed image are unavailable. To perform this type of correction, we need both the Chicago 2000 image and the Chicago 2009 image open at the same time in ERDAS Imagine 2013. First, we link and synchronize the images to locate surface features. Once we find the first point, a rooftop at O'Hare International Airport, we open the spectral profile tool under the multispectral option. After unlinking and unsynchronizing the two images, we create a profile point at the same location in each image to collect the first spectral profile for each image; each image needs its own spectral profile. We then continue collecting profile points over various surface features on the map. It is important that each point is taken at the same location in both images. For this lab we take a total of 15 spectral signature points: 5 in Lake Michigan, 5 in urban/built-up areas, 4 in internal lakes, and the original point at O'Hare International Airport. After all the points have been taken, the spectral profile graph for the Chicago 2000 image and the one for the Chicago 2009 image should each contain all 15 points (Fig. 9).

(Fig. 9) Once all the spectral signatures have been collected from both of the images they will have spectral profiles which contain all 15 points for each of the images.

The next step is to click on the tabular data view in the spectral profile window. This view shows the actual pixel values of the samples collected from the images. To organize these values, we create a table in Microsoft Excel with 15 rows (one per sample) and 6 columns (one per band). We make two separate tables, one for the Chicago 2000 tabular data and one for the Chicago 2009 tabular data (Fig. 10). These tables contain the mean value of each sample in each band. Next, we create a scatter plot for each band that plots the 2000 and 2009 mean values against each other, for a total of 6 graphs. For each graph, add a linear regression line: its slope is the gain and its y-intercept is the bias. These values are then used in the formula that corrects the image via the normalization method (Fig. 10). Next, we open Model Maker once again and create 6 models in the same window, as earlier in the lab. Each band of the Chicago 2009 image acts as a separate input image, and the formula from Fig. 10 is applied with the gain and bias for that band (Fig. 11). After the model has been run, stack the layers to produce the final output image. You can then compare the original Chicago 2000 image to the atmospherically corrected image (Fig. 12).

(Fig. 10) This is the formula used when conducting relative atmospheric correction using the multidate image normalization method.

(Fig. 11) The model maker used for the multidate image normalization method uses each of the individual bands from the Chicago 2009 image and the formula from Fig. 10 to produce the output image.

(Fig. 12) The original image can be seen on the left while the atmospherically corrected image (using the multidate image normalization method) can be seen on the right.
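The Excel regression and the Model Maker step can be sketched together in Python for one band. The Fig. 10 formula is not reproduced in the text, so this sketch assumes the common form normalized = gain × DN_2009 + bias, with the 2009 (subject) means on the x-axis and the 2000 (reference) means on the y-axis so that the output lands on the 2000 image's scale. The function names and the sample means are hypothetical.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = gain * x + bias; returns (gain, bias)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    gain = sxy / sxx
    return gain, mean_y - gain * mean_x


def normalize_band(subject_band, gain, bias):
    """Rescale one band of the subject (2009) image onto the reference (2000) image."""
    return [[gain * v + bias for v in row] for row in subject_band]


# Hypothetical per-sample means for one band (only 5 of the 15 points shown).
means_2009 = [18.0, 22.5, 64.0, 80.5, 35.0]  # x: subject image
means_2000 = [21.0, 25.0, 70.5, 88.0, 39.5]  # y: reference image

gain, bias = fit_line(means_2009, means_2000)
normalized = normalize_band([[18, 64], [80, 35]], gain, bias)
```

Repeating this for each of the 6 bands gives the 6 gain/bias pairs that the 6 models in Model Maker use. Swapping the axes would produce a different line, so the plot orientation should match how the formula is applied.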

Results

After completing this lab, the image analyst has the skills to perform both absolute and relative atmospheric correction using empirical line calibration, enhanced image-based dark object subtraction, and multidate image normalization. Each method has its own strengths and weaknesses: ELC depends on in situ or spectral library reference data, DOS depends on image metadata, and multidate normalization needs only a second image of the same area but produces a relative rather than an absolute correction. With these methods, the analyst can choose a correction approach suited to the data available for a given remotely sensed image.

Sources

The data used throughout this lab exercise were collected from the following sources: the Landsat satellite image is from the Earth Resources Observation and Science Center, United States Geological Survey. All data were provided by Dr. Cyril Wilson of the University of Wisconsin-Eau Claire.